Impact of Artificial Intelligence on Elections

Table of Contents

III. Overview: Artificial Intelligence and Elections

- Prohibition and Disclosure

- Disclosure

- Prohibition

- Moving Forward

- Figure 1: Legislation Enacted on Elections and AI by Type and Penalty (2019-2024)

- Figure 2: Approved and Pending Federal Restrictions on Elections and AI

V. Public Awareness and Individual Responsibility


Executive Summary

Artificial intelligence (AI) is already having an impact on upcoming U.S. elections and other political races around the globe. Much of the public dialogue focuses on AI’s ability to generate and distribute false information, and government officials are responding by proposing rules and regulations aimed at limiting the technology’s potentially negative effects. However, questions remain regarding the constitutionality of these laws, their effectiveness at limiting the impact of election disinformation, and the opportunities the use of AI presents, such as bolstering cybersecurity and improving the efficiency of election administration. While Americans are largely in favor of the government taking action around AI, there is no guarantee that restrictions will curb potential threats.

This paper explores AI impacts on the election information environment, cybersecurity, and election administration to define and assess risks and opportunities. It also evaluates the government’s AI-oriented policy responses to date and assesses the effectiveness of primarily focusing on regulating the use of AI in campaign communications through prohibitions or disclosures. It concludes by offering alternative approaches to increased government-imposed limits, which could empower local election officials to focus on strengthening cyber defenses, build trust with the public as a credible source of election information, and educate voters on the risk of AI-generated disinformation and how to recognize it.

AI technology is here to stay, so it is incumbent on our leaders and citizens to adapt to its use without seeking protection via government rules and regulations that are unlikely to achieve their intended purpose.

Introduction

As the technology continues to advance and integrate into all aspects of modern society, AI is already garnering attention for impacting elections around the globe. To date, much of the public dialogue has focused on AI as a threat because of its potential to accelerate the creation and distribution of disinformation and deepfakes. Unsurprisingly, government officials are responding to these concerns by proposing or enacting rules and regulations aimed at limiting AI’s potential negative effects. Similarly, technology companies are outlining detailed agendas for how they plan to mitigate harm from deceptive AI election content.

Importantly, however, AI also holds the potential to bolster the security of an election’s cyber infrastructure and improve the efficiency of election administration. Therefore, rather than proposing additional limits on AI-generated speech, which may run afoul of the First Amendment and would likely do little to curb any threats posed by the technology, federal and state policymakers should empower local election officials to focus on strengthening cyber defenses, build trust with the public as a credible source of election information, and educate voters on the risk of AI-generated disinformation and how to recognize it.

In this paper, we explore the range of AI impacts on elections, both positive and negative, and document the types of policy responses that have been put forward by federal and state government officials. In doing so, we discuss some of the challenges policymakers face in achieving stated policy goals. We finish by discussing the essential role that individual voters, election officials, and candidates need to play in minimizing the potential harms of AI while maximizing its benefits. Ultimately, AI technology is here to stay, and it is vital that our leaders and individual citizens take on the responsibility of adapting to this new reality while setting aside the temptation to seek protection from government rules and regulations that are unlikely to achieve their intended purpose.

AI in Elections

The paper explores AI impacts on the election information environment, cybersecurity, and election administration to define and assess risks and opportunities.

Overview: Artificial Intelligence and Elections

AI refers to the “capability of computer systems or algorithms to imitate intelligent human behavior.” The term dates back to the 1950s, and AI capabilities have grown alongside improvements in computing overall. In recent years, AI sophistication has accelerated rapidly while the costs associated with accessing it have declined.

AI is already integrated into many aspects of the U.S. economy, and many people interact with AI tools in their daily life. For example, banks use AI to assist in a variety of functions including fraud protection and credit underwriting. Email services like Gmail utilize AI to block unwanted spam messages, and ecommerce websites like Amazon use AI to connect individual shoppers with the products they are seeking. Yet, despite its existing integration in the background of modern life, AI recently entered the public consciousness in a more direct way with the release of OpenAI’s ChatGPT, a chatbot that can create written content and produce highly realistic audio recordings, photos, and videos using “generative AI” tools. When AI is used to imitate well-known people, particularly for nefarious purposes, the result is known as a “deepfake.”

From an election perspective, these advances in AI technology are likely to impact the overall information environment, cybersecurity, and administrative processes. Although this emerging technology has garnered attention largely for its risks, as we outline below, there are also significant potential benefits to leveraging AI in our election environment.

Information Environment

An election’s information environment is the most obvious area in which AI could have a potential impact. AI tools can empower users to generate and distribute false information quickly, convincingly, and at a very low cost. The information itself can range from sophisticated deepfake videos created through generative AI programs to simpler text- or image-based disinformation.

In terms of distribution, AI tools can enable bots to flood social media sites with an overwhelming wave of misinformation. AI technology can also be paired with other communication methods such as cell phones to create and deliver targeted deceptive robocalls. This misinformation could be technical, such as lies about changes to voter eligibility or the return deadline for absentee ballots, or political, such as a video of a candidate speech that never actually happened.

Such AI-generated misinformation has already entered our political sphere. During the lead-up to the 2024 New Hampshire Democratic primary, many voters received a robocall discouraging them from voting, delivered in a voice that sounded like it belonged to President Joe Biden. However, investigators determined that the audio recording was generated by AI and commissioned by a supporter of Dean Phillips, one of Biden’s opponents in the primary election.

On the Republican side, a Super PAC supporting Ron DeSantis for president ran a television advertisement featuring language written by Donald Trump in a social media post and read by a voice that sounded like Trump but was generated by AI. Unlike the Biden robocall, the message in the ad accurately represented something communicated by Trump, but the audience was still left with the false impression that Trump had made the statement in a recorded speech.

While each of these examples was quickly identified as a deepfake and deconstructed extensively in the media, they function as a harbinger of future deceptive campaign tactics, particularly in lower-profile races with fewer resources and dimmer media spotlights. Even in high-profile races with narrow margins, the use of such deceptive tactics in a key swing state could have a significant impact, especially considering that false information spreads quickly on social media.

Current responses to this threat are multipronged. In the private sector, major tech companies released an agreement in February 2024 outlining their approach to managing the threat of deceptive AI related to elections. The accord emphasizes voluntary measures to identify and label deceptive AI content and supports efforts to educate the public about AI risks. At the same time, individual companies have taken steps to limit the use of their products or platforms for political purposes altogether.

Meanwhile in the public sector, federal and state lawmakers have introduced new bills and regulations to prohibit or require greater public disclosure when AI is used in political communications. State and local election officials have also mobilized across the country by holding tabletop exercises to practice their responses to various potential disruptions, including AI-generated misinformation.

Cybersecurity

Cyberattacks, which seek to steal confidential information, change information within a system, or shut down the use of a system, have been a longstanding risk to elections. For example, a cyberattack on a voter registration database could increase the risk of identity theft, while an attack that takes an election office website offline could prevent the timely reporting of results. Cyberattacks can also be paired with coordinated disinformation campaigns that amplify the consequences of a minor cyber incident and damage public perception of the integrity of the election.

Recognizing this risk, the federal government designated elections as “critical infrastructure” in 2017 and, in 2018, tasked the Cybersecurity and Infrastructure Security Agency (CISA) with oversight of the nation’s election cybersecurity. While cyberattacks, particularly from overseas actors, remain a pressing threat, CISA has recognized that “generative AI capabilities will likely not introduce new risks, but they may amplify existing risks to election infrastructure.”

For example, efforts to access confidential data often include attacks on voter registration databases that contain personally identifiable information such as names, birthdates, addresses, driver’s license numbers, and partial social security numbers. In 2016, hackers gained access to voter registration databases in Arizona through a phishing email to a Secretary of State’s office staffer and in Illinois through a structured query language (SQL) injection—a common technique used to take malicious action against a database through a website. More recently in Washington, D.C., hackers stole voter data and offered it for sale online in October 2023. Similarly, 58,000 voters from Hillsborough County, Florida, had their personal information exposed after an unauthorized user copied files containing voter registration data.
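To make the SQL injection technique mentioned above concrete, the following is a minimal sketch in Python using the standard-library `sqlite3` module. It is not a reconstruction of the 2016 Illinois incident. The table, the column names, the sample records, and the `lookup_unsafe` / `lookup_safe` helpers are all hypothetical and exist only to illustrate why building a query by pasting user input into SQL text is dangerous and how a parameterized query avoids the problem.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with a few fictional voter records."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE voters (voter_id INTEGER PRIMARY KEY, last_name TEXT, precinct TEXT)"
    )
    conn.executemany(
        "INSERT INTO voters (last_name, precinct) VALUES (?, ?)",
        [("Alvarez", "North"), ("Baker", "North"), ("Chen", "South")],
    )
    return conn


def lookup_unsafe(conn, last_name):
    # Vulnerable pattern: the user's input becomes part of the SQL text itself,
    # so crafted input can change the meaning of the query.
    query = "SELECT last_name, precinct FROM voters WHERE last_name = '" + last_name + "'"
    return conn.execute(query).fetchall()


def lookup_safe(conn, last_name):
    # Safer pattern: the "?" placeholder sends the input as data only,
    # so it can never be interpreted as SQL.
    query = "SELECT last_name, precinct FROM voters WHERE last_name = ?"
    return conn.execute(query, (last_name,)).fetchall()


conn = build_demo_db()

# Input a website visitor might type into a hypothetical "look up my registration" form.
malicious_input = "x' OR '1'='1"

unsafe_rows = lookup_unsafe(conn, malicious_input)  # returns every record in the table
safe_rows = lookup_safe(conn, malicious_input)      # returns nothing: no voter has that name
```

In this sketch the crafted input turns the unsafe query's filter into a condition that is always true, exposing every record, while the parameterized version treats the same input as an ordinary (non-matching) name.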

Beyond the theft of personal information, cyber-criminals can disrupt access to a system or website through a distributed denial-of-service (DDoS) attack. These attacks commonly seek to overwhelm a website, ultimately shutting it down, by generating high volumes of traffic through the use of bots working in tandem. The Mississippi Secretary of State’s site suffered a DDoS attack on Election Day in 2022, which prevented public access to the website. While DDoS attacks disrupt the flow of information, they are relatively limited in impact and generally do not affect the voting process or ballot integrity.

Although it is clear that AI has accelerated the sophistication and power of cyberattacks, it is important to recognize that it can also bolster our cyber defenses. The technology can help improve threat detection and remediation, enhance efficiency of the cyber workforce by handling more routine tasks, and strengthen data security. While it may be easier for many election officials to identify the threats raised by emerging technologies like AI, they must also look for appropriate ways to incorporate AI into their cybersecurity strategies.

Whether or not election offices incorporate AI into their cyber defense, a number of existing cybersecurity best practices can help reduce the threat of AI attacks. Multifactor authentication, strong passwords, email authentication protocols, and cybersecurity training for staff can help stop AI-generated phishing and social engineering attacks. According to CISA, “election officials have the power to mitigate against these risks heading into 2024, and many of these mitigations are the same security best practices experts have recommended for years.”

Election Administration

One of the hallmarks of the election system in America is decentralization. Elections are administered at the state and local level, with more than 10,000 election jurisdictions across the country. This design can instill confidence in the process by maintaining a sense of connection between voters and the individuals administering their local election while making it more difficult for bad actors to disrupt elections at scale. At the same time, this approach can also have operational inefficiencies and workforce challenges, some of which could be improved through tools employing AI technology.

One example of where AI could help election administrators is in the tabulation of hand-marked paper ballots. Approximately 95 percent of U.S. voters will cast a paper ballot in 2024, and most of those votes will be cast by filling in a bubble or checking a box with a pen. The rest will use ballot-marking devices where the voter makes the selections on a machine that then generates a paper ballot with the voter’s choices. Both types of paper ballots are then processed through an optical scanner that records the votes.

Invariably, a certain number of hand-marked ballots will be unreadable by the optical scanner for some reason, ranging from physical damage to unclear markings. When this happens, the ballot must be reviewed by election workers and either replicated on a new readable ballot or hand counted, depending on the jurisdiction.

Researchers from three universities are developing a system that uses AI to assist in this process of reviewing hand-marked paper ballots. Specifically, the technology would serve as a check on the primary ballot scanner by identifying ballots for election workers to analyze more closely due to ballot anomalies such as marks outside the typical voting area or bubbles that are lightly filled in. They are also exploring how the technology could help identify fraudulent ballots completed by a single individual.

Signature verification is another process where AI is already helping improve the efficiency of election administration. To confirm that a ballot belongs to the intended voter, many states require signature verification for mail-in ballots, which involves comparing the on-file signature of an individual to the signature on the envelope containing the ballot. This is a time-consuming and labor-intensive process that can be expedited with the assistance of technology that identifies signatures that require additional human review. As of 2020, there were at least 29 counties using AI for this purpose.

An important consideration for the use of AI in these types of election administration tasks is to maintain human touchpoints throughout the process. AI tools are not perfect, so humans must be involved, especially when AI is used to help determine a voter’s eligibility to cast a ballot or whether a mail-in ballot will be counted based on the signature verification. It will also be important for election offices to be transparent with the public about the vendor, how the tool is used, and any plans for addressing problems that arise.
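The "human touchpoint" principle for signature verification can be sketched in a few lines of Python. This is an illustration only and does not describe any vendor's product or any county's procedure. The similarity score, the `Envelope` record, the `triage` function, and the 0.90 threshold are all assumptions made for this sketch. The point it shows is the design choice: the automated step may pass a signature along or flag it, but it never rejects a ballot on its own.

```python
from dataclasses import dataclass

# Hypothetical cutoff; a real office would set and publish its own policy.
AUTO_ACCEPT_THRESHOLD = 0.90


@dataclass
class Envelope:
    ballot_id: str
    similarity: float  # assumed 0.0-1.0 score from a hypothetical signature-matching model


def triage(envelopes, threshold=AUTO_ACCEPT_THRESHOLD):
    """Split envelopes into those passed along and those routed to human review.

    There is deliberately no "rejected" bucket: in this sketch only a human
    reviewer can decide that a signature does not match.
    """
    accepted, needs_human_review = [], []
    for env in envelopes:
        if env.similarity >= threshold:
            accepted.append(env.ballot_id)
        else:
            needs_human_review.append(env.ballot_id)
    return accepted, needs_human_review


batch = [
    Envelope("A-001", 0.97),
    Envelope("A-002", 0.62),
    Envelope("A-003", 0.90),
    Envelope("A-004", 0.15),
]
accepted, needs_human_review = triage(batch)
```

Under these assumptions, envelopes A-001 and A-003 are passed along, while A-002 and A-004 go to an election worker for a closer look. Logging each score alongside the reviewer's final decision would also support the transparency the paragraph above calls for.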

Public records requests provide a final example of AI deployment in election administration, and they succinctly illustrate both the potential risks and benefits of the technology. Since 2020, election offices have experienced an uptick in public records requests. While there are certainly legitimate reasons for seeking access to public documents and encouraging government transparency, there are also ample opportunities to make records requests in bad faith as a way of bogging down the system. Unfortunately, AI could be used to worsen the problem. A bad actor could use AI tools to rapidly generate and disseminate requests across multiple jurisdictions to divert resources in understaffed election offices and disrupt election processes.

Yet, in the same stroke, AI can help increase transparency while keeping local election officials focused on running elections. Local officials can use AI to process records requests and initiate the search for relevant records, ultimately leading to an overall increase in government efficiency and transparency. A number of federal agencies (including the State Department, Justice Department, and Centers for Disease Control and Prevention) are already experimenting with using AI tools to assist in managing and fulfilling public records requests. Local election offices could benefit from similar AI tools to help manage the influx of requests while maintaining focus on their primary function of administering secure and trustworthy elections.

Policy Responses

In response to advancing AI technology, policymakers at the federal and state level are primarily focused on minimizing the impact of AI-driven election disinformation. The proposals they draft often seek to prohibit the use of AI for deceptive purposes in elections or require disclosure of the use of AI in campaign speech.

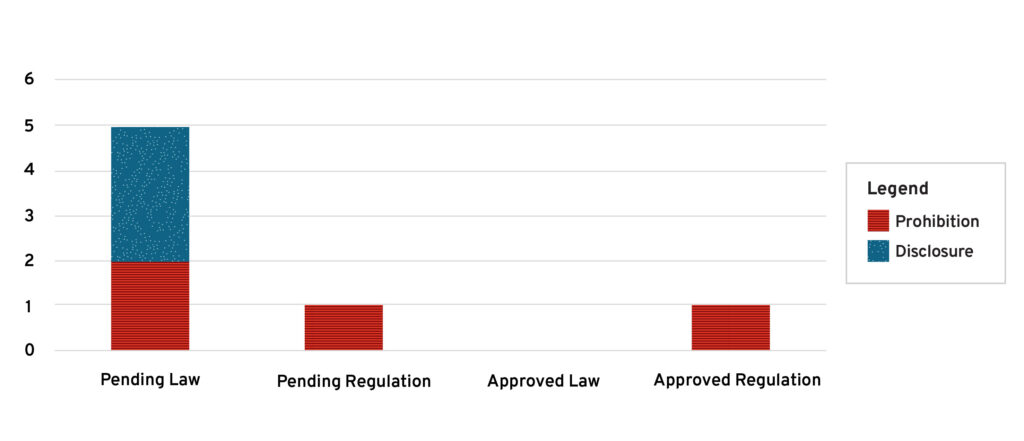

At the federal level, members of Congress have introduced at least five bills aimed at restricting AI in elections, and two of these were approved by the Senate Rules Committee on May 15, 2024. Independent agencies like the Federal Communications Commission (FCC) and Federal Election Commission (FEC) have also taken or are considering action under their existing regulatory authority. Meanwhile, 17 states now have laws on the books that ban the use of AI in certain election circumstances or establish disclosure requirements.

These legislative moves indicate that there is clearly momentum in favor of continued government action around AI. Public opinion is generally in favor of these actions across party lines. However, questions remain regarding the constitutionality of these laws, as well as their effectiveness at limiting the impact of election disinformation. In the following section, we examine these efforts to minimize AI-driven electoral harms through legislation and regulation that establishes disclosure requirements and prohibitions. We also assess how a less restrictive legislative proposal in Congress and a recent decision by the U.S. Election Assistance Commission (EAC) could provide lessons for an alternative approach that empowers local election officials and emphasizes public education and individual responsibility.

Prohibition and Disclosure

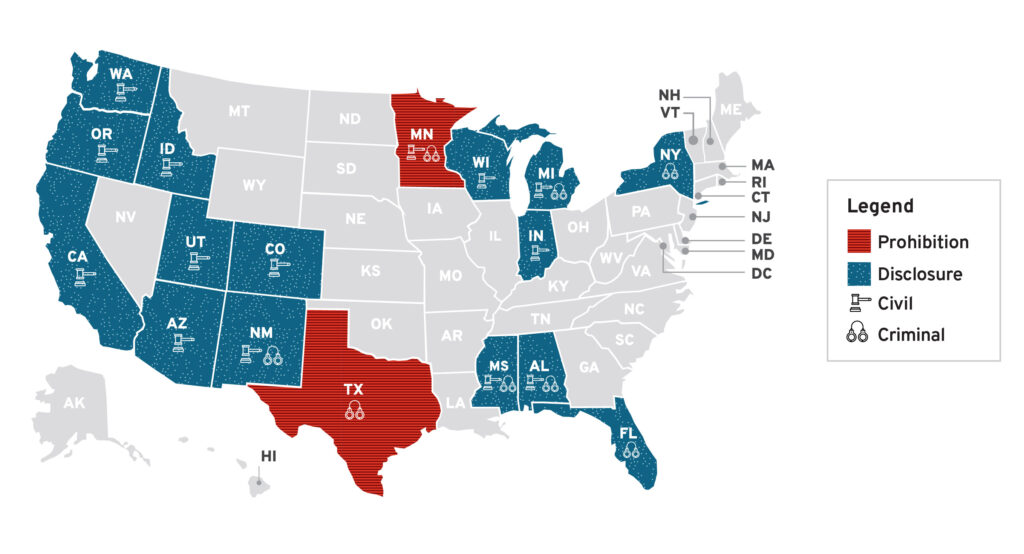

In response to the potential impacts of AI on elections, policymakers have generally proposed or enacted laws and regulations that either ban the use of AI for certain purposes or require a disclosure indicating that AI was used to produce the image, video, or audio used in the election communication. Among the states that have enacted legislation related to AI and elections, requiring a disclosure is the most common approach.

Figure 1: Legislation Enacted on Elections and AI by Type and Penalty (2019-2024)

Source: “Artificial Intelligence (AI) in Elections and Campaigns,” National Conference of State Legislatures, June 3, 2024. https://www.ncsl.org/elections-and-campaigns/artificial-intelligence-ai-in-elections-and-campaigns.

In Washington, D.C., Congress and federal agencies are also considering new laws and regulations related to AI and elections, including a mix of prohibitions and disclosure requirements. Two such proposals that have gained some traction in Congress are S. 2770 and S. 3875. Sponsored by Sen. Amy Klobuchar (D-Minn.) and approved by the U.S. Senate Rules Committee in May 2024, these bills seek to minimize the impact of AI-driven election disinformation through bans and disclosures. At the agency level, the FCC is so far the only agency to fully adopt one of these policy options, having confirmed that an existing ban on deceptive robocalls also applies to AI-generated robocalls.

Figure 2: Approved and Pending Federal Restrictions on Elections and AI

Sources: American Action Forum, last accessed April 16, 2024. https://www.americanactionforum.org/list-of-proposed-ai-bills-table/?gad_source=1&gclid=CjwKCAjwh4-wBhB3EiwAeJsppMrPcaPFtrItaEjyIeo8dpXRrQ5Hs9ZK6fUiC9Y5Ijh5jFOJ-Z4pExoCbIoQAvD_BwE; David Garr, “Comment sought on amending regulation to include deliberately deceptive Artificial Intelligence in campaign ads,” Federal Election Commission, last accessed Aug. 16, 2023. https://www.fec.gov/updates/comments-sought-on-amending-regulation-to-include-deliberately-deceptive-artificial-intelligence-in-campaign-ads; Office of Media Relations, “FCC Makes AI Generated Voice in Robocalls Illegal,” U.S. Federal Communications Commission, last accessed April 11, 2024. https://docs.fcc.gov/public/attachments/DOC-400393A1.pdf

Disclosure

The most common approach to regulation is requiring a disclosure when AI is used to generate content in an election communication. Currently, 15 states have approved legislation requiring a disclosure when AI-generated election content appears in a communication, and three bills are pending in Congress that would require a similar approach, including S. 3875.

The disclosure requirements generally apply only if the AI-generated media is materially deceptive and/or intended to influence the outcome of an election. In some cases, the restriction is limited to a certain time period before the election, such as 60 or 90 days. These parameters narrow the application of the laws so that innocuous uses of AI are not subject to the same restrictions. In terms of enforcement, states impose a mix of civil and criminal penalties, with Michigan imposing the stiffest penalty of up to five years in prison for a repeat offender.

The rationale for this type of approach is that the public has a right to know when election communications are incorporating AI-generated content, following a playbook that is similar to the FEC rules requiring disclaimers about the source of funding for campaign advertisements and familiar statements by federal candidates that they “approve the message.” In addition, state governments have their own rules about disclaimers that vary significantly across the country, ranging from highly prescriptive verbiage requirements to more general statements about the sponsor of the communication.

The practical impact of these requirements remains to be seen. Candidate campaigns, political action committees, and other formal organizations are likely to comply with these restrictions, as such practices are already part of their standard operating procedures for traditional campaign communications. However, bad actors who seek to confuse voters and disrupt elections are not inclined to follow the rules to begin with, so these additional restrictions are unlikely to have much effect on those groups. There is also a risk of “over labeling” due to broad definitions of what qualifies as AI: if a high percentage of election communications carry the disclosure out of an abundance of caution, the label becomes less useful for identifying genuinely deceptive content.

Prohibition

While less common than requiring disclosure, another approach to regulating AI in elections is to prohibit its use under certain circumstances. For example, in 2019, Texas approved a general ban on the creation and distribution of a deepfake with the intent to injure a candidate or influence the outcome within 30 days of the election. Violations are a class A misdemeanor, which can be punished by up to one year in jail and a fine of up to $4,000. In 2023, Minnesota became the second state with such a ban, which applies to the 90 days leading up to the election. An initial violation is punishable by up to 90 days in jail and a fine of $1,000, increasing to five years and $10,000 for a second violation within five years of the first.

Meanwhile, in Washington, D.C., federal agencies are considering how existing statutes can be applied to regulate deceptive election communications generated by AI. For example, the FEC, an independent regulatory agency responsible for administering campaign finance law in federal elections, has initiated a rulemaking that could result in AI-generated communications being regulated under an existing prohibition on “fraudulent misrepresentation of campaign authority.” More specifically, federal campaign finance law currently prohibits candidates and their campaigns from fraudulently misrepresenting themselves as communicating or acting on behalf of an opposing candidate regarding an issue that is damaging to that other candidate. If adopted, the rulemaking could clarify that deepfakes meet the definition of communications that are already prohibited. In addition, S. 2770 would bypass the FEC and directly prohibit the distribution of materially deceptive, AI-generated media related to a federal election.

The FEC process follows a ruling by the FCC in February 2024 that using AI-generated voices in robocalls is illegal. This decision applies to all forms of robocall scams, including those related to elections and campaigns. While much of the media coverage surrounding the decision focused on the agency acting to “ban” or “outlaw” the use of AI in robocalls, the actual decision was a simple confirmation that existing federal regulations apply to AI-generated phone calls.

Moving Forward

Americans are concerned about the impact that AI could have on elections, with around one-half of the population expecting negative consequences for election procedures and a further deterioration of campaign civility. The policy actions from federal and state officials are driven in large part by this public concern. However, questions remain regarding the constitutionality of some of these laws, as well as their effectiveness at achieving the stated policy goals.

For example, laws regulating the use of AI in election communications establish a restriction on election speech, which raises First Amendment questions. The level of restriction depends on whether the law establishes a ban or a disclosure requirement, and the U.S. Supreme Court has largely accepted the use of disclosures and disclaimers to inform the public about things like sources of funding for campaign advertisements. On the other hand, prohibiting speech based on the technology used to create or disseminate it could be more vulnerable to a constitutional challenge. In the event that these AI laws are successfully challenged, election officials and the general public should be informed and prepared. This preparation is important regardless: even if the government restrictions on deceptive AI content are constitutional, there is no guarantee that they will be effective.

Consider the example of the decades-long effort by the FCC to regulate illegal, unwanted robocalls. Such calls have been illegal since 1991 when Congress passed the Telephone Consumer Protection Act (TCPA), which prohibited making calls using an “artificial or prerecorded voice” without the consent of the call recipient. Over the past five years, however, Americans have received, on average, more than 50 billion robocalls per year that violate the TCPA. There are various explanations for why the ban is ineffective, ranging from a lack of enforcement authority to outdated definitions. Recent reforms and advances in monitoring technology have the potential to change this trajectory, but the issue remains that prohibitions imposed by the government rarely work as intended.

This example is particularly relevant because the scammers have consistently found a way to evade the rules as they have evolved over the years. Considering the enhanced capabilities of AI, there is no reason to believe that bad actors seeking to disrupt election processes will be any less skilled at navigating the rules than the lower-tech robocall scammers are. As a result, Americans and local election officials should anticipate the coming wave of AI-driven disinformation and focus on preparing to identify and counter the potential impacts.

An example of this alternative approach comes from the U.S. Election Assistance Commission (EAC), a federal commission that helps election officials improve the administration of elections by serving as a national information clearinghouse, setting voluntary election equipment standards, and administering election security grants. The EAC issued a decision in February confirming that certain election security grant funds it administers can be used to help local election officials counter AI-generated disinformation. In comparison to the heavy-handed legislative proposals we discussed previously, the EAC’s lighter touch has promise, particularly as it relates to voter education.

The main strength of the EAC approach is its emphasis on countering, rather than preventing, AI-generated election disinformation. Its effectiveness will depend on the specific approach each state takes under this authority, but the greatest opportunity will likely be found in efforts to educate the public about accurate voting information and procedures.

Another strength of the EAC approach is that states have the flexibility to innovate. The EAC decision outlines allowable categories of spending, but state and local election officials are in the best position to experiment with different approaches and develop best practices for countering disinformation over time through public education.

While the most effective strategies for dealing with AI will likely emerge from outside Washington, D.C., the federal government can still play a useful role supporting local election officials. For example, in May, the Senate Rules Committee approved a third bill alongside the prohibition and disclosure bills. That bill, S. 3897, tasks the EAC, in consultation with the National Institute of Standards and Technology (NIST), with developing voluntary guidelines for local election officials that address the uses and risks of AI in various aspects of election administration.

Voluntary guidelines are useful because they provide support to those who need the help while leaving space for individual offices to innovate and explore different strategies for adapting to this new technology. S. 3897, along with the February EAC decision, creates space for this innovation while providing meaningful support to election officials as they adapt to the new reality of administering free and fair elections in the age of AI.

Public Awareness and Individual Responsibility

Despite the efforts of federal and state officials to regulate deceptive AI content, it is still likely to play a role in elections moving forward, but there are specific actions that election officials, individual citizens, and political candidates can take to limit the impact of the deception. In this section, we summarize those actions.

Election Officials

Election officials play an essential role as a source of trusted information about the voting process. They must work to establish this relationship with the public early to build the credibility necessary to counter deceptions that arise closer to Election Day. For example, last November the National Association of Secretaries of State launched an initiative to build trust with the public while individual Secretaries, such as Maggie Toulouse Oliver in New Mexico and Michael Adams in Kentucky, established online resources for countering election rumors.

To support this effort, election officials should leverage the recent decision by the U.S. EAC to allow security grant funding from the Help America Vote Act to be used for countering election disinformation. The EAC's decision provides an opportunity for state and local officials to experiment with different approaches to ensuring that the public receives accurate information about voting times, locations, and processes.

On the cybersecurity front, election officials once again play a central role in protecting the election infrastructure by ensuring that they follow best practices and take advantage of federal support from agencies like CISA. For example, election offices can sign up for CISA’s free cyber hygiene vulnerability scanning services to help identify weaknesses or request an in-person cybersecurity assessment.

Basic cybersecurity practices, such as using multifactor authentication and keeping software up to date, can also go a long way toward defending against sophisticated phishing and social engineering attacks strengthened by AI capabilities.

Finally, election officials can develop contingency plans and participate in tabletop exercises to practice their response to various scenarios in advance of Election Day. These events allow election officials to gain experience responding to a range of disruptions and can be tailored to account for AI-driven disinformation or cyber incidents.

Voters and Candidates

Voters need to remain skeptical of information they consume online and anticipate that there will likely be an uptick in deceptive content as Election Day approaches. Fortunately, voters already have a high degree of skepticism regarding election information generated by ChatGPT, so they simply need to maintain this mindset regarding information that flows to them unsolicited.

One example of what this looks like in practice is a tool developed by RAND consisting of a basic three-part framework that urges individuals, when they engage with social media, to consult multiple sources, resist emotional manipulation, and take personal responsibility for not spreading false information.

Civil society groups, the private sector, and media can support voter education and raise public awareness of the risks involved with AI by continuing to draw attention to the issue so that it does not slip out of the public consciousness, while taking care not to sensationalize it.

Finally, candidates for office have an opportunity to defuse the effects of deceptive AI-generated content by pledging not to use it and urging their supporters to do the same. Candidates also need to resist the temptation to claim that unflattering audio or video is an AI deepfake when it is real. Simple decisions by leaders to avoid fanning the flames would go a long way toward minimizing the potential impact of false information generated by AI.

Conclusion

AI has the potential to disrupt elections in 2024 and beyond with regard to election infrastructure, election cybersecurity, and election administration. The response to this threat by lawmakers has been a bipartisan rush to pass new rules and regulations aimed at limiting deceptive AI election content. Yet, instead of new rules and regulations, state and federal policymakers, as well as citizens, would be better served by empowering local election officials to focus on strengthening cyber defenses and by educating voters on the risk of AI-generated disinformation and how to recognize it. American elections are decentralized by design, and this approach, which empowers individuals at the local level, provides the best opportunity to truly strengthen American democracy for years to come.

About the Author

Chris McIsaac is a governance fellow at the R Street Institute.